I began this post wanting to share something about the amazing technologies that are surfacing for 3D character development. Because technology is always changing, this post may age quickly, and I may have missed something recently released or coming soon. Please don't hesitate to comment and let me know of such new tech. Some of this is not exactly new but may have recently come of age. The intent is to share the barriers being broken and to consider what an advanced 3D character development pipeline should look like and which technologies it should include.

Photo Scanning

You can't help but love the detail and image map quality that you get from a tricked-out photo scan. And although it would be rather expensive to assemble one... I would love to have a photo scanning stage and the Pro version of Agisoft PhotoScan (like the example below of James' rig at ten24). Oh, and while I'm asking, I'd like his experience as well.

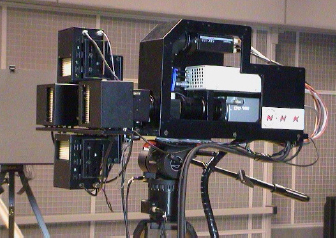

Be sure to watch the awesome piece that the scans were used on!

Spatial Phase Imaging

While photo scanning is taking the 3D world by storm, there is still more storming of industries to come. Over the years I have continued to pursue a side passion: depth-mapping cameras. Before there was a Microsoft Kinect, I was visiting NHK's R&D department in Japan to see their Axi-vision depth-mapping camera (images below). At the time there were few vendors who even offered a depth-mapping solution and even fewer who could do it well. While NHK's camera was amazing, it was also rather expensive at $400k. But it was Microsoft who purchased a little Israeli company and began Project Natal, which would eventually become the Kinect. All of a sudden the world was discovering depth mapping, and the ramifications were very exciting. But I never wanted a device with a resolution below 640 that could only be operated indoors. I wanted a robust camera capable of great fidelity.

In my searching I came across this company (Photon-X) about a year or two ago. They created a Spatial Phase Imaging (SPI) sensor. In more common language, that is a camera sensor that captures surface normal information, which is then processed into a 3D model. The fidelity is fantastic and appears to be (according to conversations with the company) fairly robust in outdoor lighting. Unfortunately, this one is still buried behind IP development and other more lucrative industries. But I fully expect this style of sensor to take over all the ways we image in the coming years. This is how future photo scanning will go from 140 or so photos to resolve a fantastic human model down to five or six photos taken with an SPI sensor. This is also the sensor technology that will bring us fully accurate 3D performances as a frame-by-frame 3D model sequence.

www.photon-x.com

Scans Over Time
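To make the surface-normal idea behind the SPI sensor a little more concrete, here is a minimal Python sketch of one simple way per-pixel normals can be turned into relative depth. This is not Photon-X's method (their processing is not public). The function names `plane_normals` and `depth_from_normals` and the naive row-then-column integration are assumptions made purely for illustration, and real sensor data would want a more noise-tolerant approach.

```python
import math


def plane_normals(rows, cols, slope_x, slope_y):
    """Hypothetical test input: unit normals of the plane z = slope_x*x + slope_y*y."""
    length = math.sqrt(slope_x ** 2 + slope_y ** 2 + 1.0)
    normal = (-slope_x / length, -slope_y / length, 1.0 / length)
    return [[normal for _ in range(cols)] for _ in range(rows)]


def depth_from_normals(normals):
    """Integrate a grid of unit normals (nx, ny, nz) into relative depth.

    Assumes every normal faces the camera (nz > 0) and pixels are one unit apart.
    Depth is relative: the top-left pixel is pinned to 0.
    """
    rows, cols = len(normals), len(normals[0])

    def slopes(n):
        # For a surface z(x, y), the normal is proportional to (-dz/dx, -dz/dy, 1).
        nx, ny, nz = n
        return -nx / nz, -ny / nz

    depth = [[0.0] * cols for _ in range(rows)]

    # Walk along the first row, accumulating the averaged x-slope.
    for x in range(1, cols):
        p_prev, _ = slopes(normals[0][x - 1])
        p_cur, _ = slopes(normals[0][x])
        depth[0][x] = depth[0][x - 1] + 0.5 * (p_prev + p_cur)

    # Walk down each column, accumulating the averaged y-slope.
    for y in range(1, rows):
        for x in range(cols):
            _, q_prev = slopes(normals[y - 1][x])
            _, q_cur = slopes(normals[y][x])
            depth[y][x] = depth[y - 1][x] + 0.5 * (q_prev + q_cur)

    return depth


if __name__ == "__main__":
    depth = depth_from_normals(plane_normals(3, 4, 0.5, 0.25))
    for row in depth:
        print([round(value, 3) for value in row])
```

The point of the sketch is only that normals encode slope, and slope can be summed back up into shape. A single noisy normal would smear along a whole row or column here, which is why production pipelines tend to solve for depth globally instead.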

But what I've actually been looking forward to is a way to capture a model for every frame, much as film simply records the subject in each moment. Perhaps I could use the 4D features of Agisoft, but that's a lot of data. What I need is a translation tool for distilling all that data down to something more flexible. It would need to be a kind of hybrid between motion capture and photo scanning. Well, here is an awesome group that has been working on just such an impressive solution. They call this part of it MoSh. If you were to get Spatial Phase Imaging sensors involved and marry this research with them... well, you'd have my dream solution.

http://ps.is.tuebingen.mpg.de/project/MoSh

Eyes Alive

One of the long-standing downfalls of 3D people is that their eyes tend to look dead and somehow less active than a real person's. The awesome study below shows off some of Disney's research in creating highly accurate 3D eyes with accurate iris reactions to lighting changes. Can I please get this as a plugin tomorrow? Yes, I am willing to wait all the way until tomorrow!

http://www.disneyresearch.com/publication/high-quality-capture-of-eyes/

Hair & Growth

Sure, you can place the hair by hand onto your 3D character, but if you need an entire cast by week's end, perhaps you'll need to turn to a more sophisticated pipeline. The results here are fantastic. While this is not a product that you can simply grab off the shelf, it certainly should be, and it makes my list for an ideal pipeline/workflow for building 3D characters.

https://graphics.ethz.ch/publications/papers/paperBee12.php

While that's great for facial hair and the like, it still leaves a lot to be desired for female character creation. This fantastic research shows off a process by which Disney Research is "regrowing" hair to match an input image. Very exciting tech.
https://www.youtube.com/watch?v=QCgWMIYGbV8

Sticky Lips

I have been frustrated for a while that this dynamic is missing from 3D humans, and it answers the question, "Why do the creases of CG humans look wrong?" One of the answers is that they are not sticking. Real lips are sticky! Go look at yourself in the mirror and open your mouth slowly. Watch how your lips stick together. That doesn't happen in CG (remember, nothing happens unless you make it so), and it makes the mouth creases of a CG character look inaccurate (at least I hope that is all). Haha! Can I PLEASE get a script like this for Cinema 4D?

https://vimeo.com/5006381

Hand Animation

This was one of those moments when I discovered a product (the Leap Motion device) and then looked around and asked, "Why isn't anyone using this for hand animation?" And happily for me, someone brilliant showed up and created Brekel Pro Hands, a fantastic implementation of hand animation recording with a Leap Motion controller.

Conclusion

While these various advances or unique approaches to the challenge of 3D human character development are exciting, most are barely available for implementation or simply have unavailable IP. What can be said is that 3D human character development is coming of age, and synthetic characters are becoming indistinguishable from real humans. But what about Digital Ira, or that CG guy in Tron, or all those other problematic attempts that didn't quite hit the mark? Yeah, I know. But it's just about here. The final details will be worked out in labs away from public view, and we'll quietly stop knowing when we saw a 3D human. And the artist will be able to reach that lofty goal much more cost-effectively than ever before. Synthetic film-making is upon us!

Below I've included some of the other neat 3D products which are also a part of these pipelines but didn't seem to fit my post exactly. Enjoy!
Dynamic Ragdoll

There are some things you simply cannot capture with motion capture (e.g., being hit by a car... okay, you kind of can. I remember shooting footage with yank harnesses back in college at speeds up to 25 mph. Not the point, though). There are actions and falls which are simply not worth having a performer perform. This is where ragdoll physics would come into play, but people do not magically stop thinking and acting for themselves as soon as they get hit by a force (flashback to those old movies where an incredibly limp dummy is thrown off a cliff or hit by a car). This is where Natural Motion's Endorphin comes in.

Facial Performance

FaceShift: www.faceshift.com

Wardrobe

Since our synthetic films will not be populated with nude characters, there is Marvelous Designer.

http://www.marvelousdesigner.com/learn/md4


Permissions & Copyrights

Please feel free to use our 3D scans in your commercial productions. Credit is always welcome but not required.

Daniel

Staying busy dreaming of synthetic film making while working as a VFX artist and scratching out time to write novels and be a dad to three.